Convection initiation in Sierras de Cordoba

Overview

We will look at the convective storm that developed near the Sierras de Cordoba on 2019-01-29.

Prerequisites

| Concepts | Importance | Notes |
|---|---|---|
| | Necessary | |
| | Helpful | Familiarity with metadata structure |

Time to learn: 10 minutes

Imports

Let’s import the required libraries.

import glob
from functools import partial

import act
import cartopy.crs as ccrs
import cmweather  # registers weather-radar colormaps with Matplotlib
import holoviews as hv
import hvplot.xarray  # adds the .hvplot accessor to xarray objects
import matplotlib.pyplot as plt
import numpy as np
import pyart
import xarray as xr
import xradar as xd
from dask.distributed import Client, LocalCluster

hv.extension("bokeh")

## You are using the Python ARM Radar Toolkit (Py-ART), an open source
## library for working with weather radar data. Py-ART is partly
## supported by the U.S. Department of Energy as part of the Atmospheric
## Radiation Measurement (ARM) Climate Research Facility, an Office of
## Science user facility.
##
## If you use this software to prepare a publication, please cite:
##
## JJ Helmus and SM Collis, JORS 2016, doi: 10.5334/jors.119

Dask Cluster

Let’s spin up a local Dask cluster.

client = Client(LocalCluster())
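Calling LocalCluster() without arguments lets Dask choose the number of workers and threads from the machine's resources. If you prefer to set them explicitly, here is a minimal sketch; the worker and thread counts are hypothetical, and processes=False (workers run as threads inside the notebook's own Python process) is a choice made for this sketch, not something the tutorial relies on.

```python
from dask.distributed import Client, LocalCluster

# Hypothetical sizing: tune these to your own machine or JupyterHub allocation.
cluster = LocalCluster(
    n_workers=2,           # number of Dask workers
    threads_per_worker=2,  # threads available to each worker
    processes=False,       # run the workers as threads in this process
)
client = Client(cluster)

# Link to the Dask dashboard, useful for watching tasks while data are processed.
print(client.dashboard_link)
```

When you are finished, client.close() followed by cluster.close() shuts the workers down.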

Data acquisition

Next, we use the Atmospheric Data Community Toolkit (ACT) to download the data. The act.discovery.download_arm_data function downloads files directly to your computer; it needs your ARM username and access token. Watch for the data citation! Show some support for ARM’s instrument experts and cite their data if you use it in a publication.

username = "XXXXXX"  # your ARM username

token = "XXXXX"  # your ARM access token (https://sso.arm.gov/arm/login?service=https%3A%2F%2Fadc.arm.gov%2Farmlive%2Flogin%2Fcas)
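A minimal sketch of the download step, assuming the act.discovery.download_arm_data(username, token, datastream, startdate, enddate) argument order used by recent ACT releases; the wrapper name download_case is hypothetical. As far as we understand ACT's defaults, when no output directory is given each datastream is saved to a folder named after it in the current working directory, which matches the later glob over corcsapr2cmacppiM1.c1/.

```python
def download_case(username, token, datastreams, startdate, enddate):
    """Download every ARM datastream in `datastreams` for one time window.

    Sketch only: assumes ACT's
    act.discovery.download_arm_data(username, token, datastream, startdate, enddate)
    signature. Returns a dict mapping each datastream to whatever ACT returns.
    """
    import act  # deferred import so the helper can be defined without ACT installed

    results = {}
    for datastream in datastreams:
        results[datastream] = act.discovery.download_arm_data(
            username, token, datastream, startdate, enddate
        )
    return results
```

With the datastream names and dates defined below, the call might look like download_case(username, token, [csarp, kzar], startdate, enddate).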

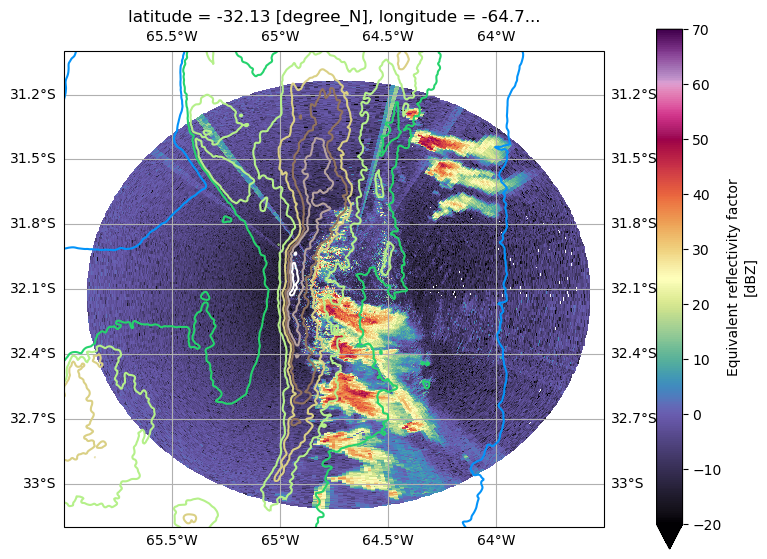

We are going to analyze data from the C-band Scanning ARM Precipitation Radar (CSAPR) and the Ka-band ARM Zenith Radar (KAZR). Data from both instruments can be found in the ARM Data Discovery portal under its data tab, where results can be filtered by date, instrument, and field campaign. Once the desired data are located, note the datastream name: for the CSAPR it is corcsapr2cmacppiM1.c1, and for the KAZR it is corarsclkazr1kolliasM1.c1.
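ARM datastream names pack several pieces of information together. As we understand ARM's naming convention, a name such as corcsapr2cmacppiM1.c1 reads as site (cor, the CACTI site in Cordoba), instrument or product (csapr2cmacppi), facility (M1), and data level (c1). A small parser, assuming that layout (a three-letter lowercase site code, a facility made of one capital letter plus digits, and a two-character level after the dot), can check an identifier before requesting data:

```python
import re

# Assumed layout of an ARM datastream name:
#   <site: 3 lowercase letters><instrument or product><facility: capital letter + digits>.<data level>
DATASTREAM_RE = re.compile(
    r"^(?P<site>[a-z]{3})(?P<instrument>[a-z0-9]+)(?P<facility>[A-Z]\d+)\.(?P<level>[a-z0-9]{2})$"
)


def parse_datastream(name):
    """Split an ARM datastream name into site, instrument/product, facility, and data level."""
    match = DATASTREAM_RE.match(name)
    if match is None:
        raise ValueError(f"Not a recognized ARM datastream name: {name!r}")
    return match.groupdict()


for name in ("corcsapr2cmacppiM1.c1", "corarsclkazr1kolliasM1.c1"):
    print(name, "->", parse_datastream(name))
```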

# Set the datastreams and the start/end dates

kzar = 'corarsclkazr1kolliasM1.c1'

csarp = "corcsapr2cmacppiM1.c1"

startdate = '2019-01-29T13:00:00'

enddate = '2019-01-29T20:00:00'
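ARM files carry their start time in the file name, ending in .YYYYMMDD.hhmmss followed by the extension. Assuming that pattern, a sketch that keeps only the files inside the startdate/enddate window is more robust than picking files by their position in a sorted list; the file names in the test are hypothetical.

```python
import re
from datetime import datetime
from pathlib import Path

# Assumed ARM-style file name ending: .YYYYMMDD.hhmmss.<extension>
STAMP_RE = re.compile(r"\.(\d{8})\.(\d{6})\.\w+$")


def file_time(path):
    """Return the start time encoded in an ARM-style file name."""
    match = STAMP_RE.search(Path(path).name)
    if match is None:
        raise ValueError(f"No .YYYYMMDD.hhmmss. stamp in {path!r}")
    date, clock = match.groups()
    return datetime.strptime(date + clock, "%Y%m%d%H%M%S")


def files_in_window(paths, startdate, enddate):
    """Keep the paths whose timestamp lies in [startdate, enddate], sorted by time."""
    start = datetime.fromisoformat(startdate)
    end = datetime.fromisoformat(enddate)
    selected = [p for p in paths if start <= file_time(p) <= end]
    return sorted(selected, key=file_time)
```

For example, files_in_window(glob.glob("corcsapr2cmacppiM1.c1/*"), startdate, enddate) would list the CSAPR volumes between 13:00 and 20:00 UTC.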

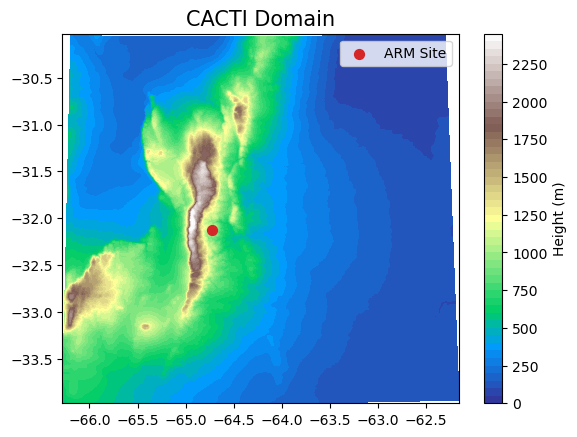

To examine the CACTI domain, we’ll read in one timestep from the LASSO simulations and plot the terrain.
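Selecting the LASSO file by position (file_list[50]) depends on how many output files precede the time of interest. A sketch that instead picks the file whose timestamp is closest to a requested time, assuming the LASSO file names end in .YYYYMMDD.hhmmss.nc (the 18:30 file, for example, ends in .20190129.183000.nc):

```python
from datetime import datetime
from pathlib import Path


def nearest_file(paths, target):
    """Return the path whose ARM-style timestamp is closest to `target` (ISO string)."""
    target_time = datetime.fromisoformat(target)

    def stamp(path):
        # Assumes names end in .YYYYMMDD.hhmmss.<extension>, e.g. ...M1.m1.20190129.183000.nc
        date, clock = Path(path).name.split(".")[-3:-1]
        return datetime.strptime(date + clock, "%Y%m%d%H%M%S")

    return min(paths, key=lambda p: abs(stamp(p) - target_time))
```

With the file list built below, ds = xr.open_dataset(nearest_file(file_list, "2019-01-29T18:30:00")) would open the 18:30 output regardless of its index.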

path_staging = "/data/project/ARM_Summer_School_2024_Data/lasso_tutorial/cacti/lasso-cacti" # path on Jupyter

file_list = sorted(glob.glob(f'{path_staging}/20190129/eda09/base/les/subset_d3/corlasso_met_*'))

ds = xr.open_dataset(file_list[50])

ds

<xarray.Dataset> Size: 6GB

Dimensions: (Time: 1, south_north: 865, west_east: 750,

bottom_top: 149)

Coordinates:

* Time (Time) datetime64[ns] 8B 2019-01-29T18:30:00

XLONG (south_north, west_east) float32 3MB ...

XLAT (south_north, west_east) float32 3MB ...

XTIME (Time) float32 4B ...

Dimensions without coordinates: south_north, west_east, bottom_top

Data variables: (12/52)

ITIMESTEP (Time) int32 4B ...

MUTOT (Time, south_north, west_east) float32 3MB ...

HGT (Time, south_north, west_east) float32 3MB ...

HAMSL (Time, bottom_top, south_north, west_east) float32 387MB ...

P_HYD (Time, bottom_top, south_north, west_east) float32 387MB ...

PRESSURE (Time, bottom_top, south_north, west_east) float32 387MB ...

... ...

MULFC (Time, south_north, west_east) float32 3MB ...

MULNB (Time, south_north, west_east) float32 3MB ...

MULPL (Time, south_north, west_east) float32 3MB ...

MUCAPE (Time, south_north, west_east) float32 3MB ...

MUCIN (Time, south_north, west_east) float32 3MB ...

REFL_10CM_MAX (Time, south_north, west_east) float32 3MB ...

Attributes: (12/39)

DX: 500.0

DY: 500.0

SIMULATION_START_DATE: 2019-01-29_06:00:00

WEST-EAST_GRID_DIMENSION: 751

SOUTH-NORTH_GRID_DIMENSION: 866

BOTTOM-TOP_GRID_DIMENSION: 150

... ...

doi_isPartOf_lasso-cacti: https://doi.org/10.5439/1905789

doi_isDocumentedBy: https://doi.org/10.2172/1905845

doi_thisFileType: https://doi.org/10.5439/1905819

history: processed by user d3m088 on machine cirrus28...

filename_user: corlasso_met_2019012900eda09d3_base_M1.m1.20...

filename_storage: corlassomet2019012900eda09d3baseM1.m1.201901...
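Plotting the terrain only needs the 2-D XLONG/XLAT coordinates and the HGT (terrain height, m) field of ds. A minimal sketch with plain Matplotlib follows; the CSAPR2 marker defaults to the latitude and longitude stored in the radar file metadata further down (-32.12641, -64.72837). Because xr.open_dataset loads lazily, only these three variables are read from disk. Cartopy, already imported, could be layered on for map features; it is left out to keep the sketch minimal.

```python
import matplotlib.pyplot as plt


def plot_terrain(ds, radar_lat=-32.12641, radar_lon=-64.72837):
    """Map LASSO terrain height (HGT) and mark the radar site.

    Assumes ds has 2-D XLONG/XLAT coordinates and an HGT(Time, south_north, west_east) field.
    """
    lon = ds["XLONG"].values
    lat = ds["XLAT"].values
    hgt = ds["HGT"].isel(Time=0).values  # terrain is static, so any timestep works

    fig, ax = plt.subplots(figsize=(8, 7))
    mesh = ax.pcolormesh(lon, lat, hgt, cmap="terrain", shading="auto")
    fig.colorbar(mesh, ax=ax, label="Terrain height (m)")
    ax.plot(radar_lon, radar_lat, marker="^", color="red", markersize=10,
            linestyle="none", label="CSAPR2")
    ax.set_xlabel("Longitude (°E)")
    ax.set_ylabel("Latitude (°N)")
    ax.legend(loc="upper right")
    return fig, ax
```

Usage: fig, ax = plot_terrain(ds).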

We’ll also plot the location of the CSAPR radar, using the metadata from one of the radar files.
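The radar's position can also be related to the LASSO grid directly, for example to sample the model terrain at the radar site and compare it with the radar altitude in the file metadata (1141 m). A minimal sketch, assuming a spherical Earth (radius 6371 km) and a brute-force search over the 2-D XLAT/XLONG arrays, which is cheap for an 865 × 750 grid:

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0  # spherical-Earth assumption


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between points given in degrees (broadcasts over arrays)."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    a = (np.sin((lat2 - lat1) / 2) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


def nearest_grid_point(xlat, xlong, lat0, lon0):
    """Return (j, i, distance_km) of the 2-D grid cell closest to (lat0, lon0)."""
    dist = haversine_km(np.asarray(xlat), np.asarray(xlong), lat0, lon0)
    j, i = np.unravel_index(np.argmin(dist), dist.shape)
    return int(j), int(i), float(dist[j, i])
```

With the objects in this notebook, the call might look like j, i, dist_km = nearest_grid_point(ds["XLAT"].values, ds["XLONG"].values, float(radar["latitude"]), float(radar["longitude"])), after which ds["HGT"].isel(Time=0, south_north=j, west_east=i) gives the model terrain height in the grid cell nearest the radar.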

files = sorted(glob.glob('corcsapr2cmacppiM1.c1/*'))

radar = xd.io.open_cfradial1_datatree(files[-6], first_dim="auto")

radar = radar.xradar.georeference()

display(radar)

<xarray.DatasetView> Size: 740B

Dimensions: (sweep: 15)

Dimensions without coordinates: sweep

Data variables:

sweep_group_name (sweep) <U10 600B 'sweep_0.0' ... 'sweep_14.0'

sweep_fixed_angle (sweep) float32 60B ...

latitude float32 4B ...

longitude float32 4B ...

altitude float32 4B ...

time_coverage_start |S32 32B ...

time_coverage_end |S32 32B ...

volume_number int32 4B ...

Attributes:

version: 2.0 lite

history: created by zsherman on or-condo-c215.ornl.gov at 2020-10-01...

references: See CSAPR2 Instrument Handbook

institution: United States Department of Energy - Atmospheric Radiation ...

source: Atmospheric Radiation Measurement (ARM) program C-band Scan...

Conventions: CF/Radial instrument_parameters ARM-1.3

comment: This is highly experimental and initial data. There are man...

<xarray.DatasetView> Size: 0B Dimensions: () Data variables: *empty*

radar_parameters

<xarray.DatasetView> Size: 0B Dimensions: () Data variables: *empty*

georeferencing_correction

- azimuth: 361

- range: 1100

- time(azimuth)datetime64[ns]2019-01-29T18:30:15.709000 ... 2...

- long_name :

- Time offset from midnight

- standard_name :

- time

array(['2019-01-29T18:30:15.709000000', '2019-01-29T18:30:15.779999000', '2019-01-29T18:30:15.850999999', ..., '2019-01-29T18:30:15.516000000', '2019-01-29T18:30:15.577000000', '2019-01-29T18:30:15.648000000'], dtype='datetime64[ns]')

- range(range)float320.0 100.0 ... 1.098e+05 1.099e+05

- long_name :

- Range to measurement volume

- units :

- m

- meters_between_gates :

- 100.0

- meters_to_center_of_first_gate :

- 50.0

- spacing_is_constant :

- True

- standard_name :

- projection_range_coordinate

- axis :

- radial_range_coordinate

array([0.000e+00, 1.000e+02, 2.000e+02, ..., 1.097e+05, 1.098e+05, 1.099e+05], dtype=float32)

- elevation(azimuth)float320.4834 0.4834 ... 0.4834 0.4834

- long_name :

- Elevation angle from horizontal plane

- units :

- degree

- standard_name :

- sensor_to_target_elevation_angle

- axis :

- radial_elevation_coordinate

array([0.483398, 0.483398, 0.483398, ..., 0.483398, 0.483398, 0.483398], dtype=float32)

- azimuth(azimuth)float320.5273 1.593 2.593 ... 358.5 359.5

- long_name :

- Azimuth angle from true north

- units :

- degree

- standard_name :

- sensor_to_target_azimuth_angle

- axis :

- radial_azimuth_coordinate

array([ 0.527344, 1.593018, 2.592773, ..., 357.5583 , 358.53607 , 359.53583 ], dtype=float32)

- latitude()float32-32.13

- long_name :

- Latitude

- units :

- degree_N

- standard_name :

- latitude

- valid_min :

- -90.0

- valid_max :

- 90.0

array(-32.12641, dtype=float32)

- longitude()float32-64.73

- long_name :

- Longitude

- units :

- degree_E

- standard_name :

- longitude

- valid_min :

- -180.0

- valid_max :

- 180.0

array(-64.72837, dtype=float32)

- altitude()float321.141e+03

- long_name :

- Altitude

- units :

- m

- standard_name :

- altitude

array(1141., dtype=float32)

- crs_wkt()int640

- crs_wkt :

- PROJCRS["unknown",BASEGEOGCRS["unknown",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]],ID["EPSG",6326]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8901]]],CONVERSION["unknown",METHOD["Azimuthal Equidistant",ID["EPSG",1125]],PARAMETER["Latitude of natural origin",-32.1264114379883,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",-64.7283706665039,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["False easting",0,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- unknown

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- unknown

- grid_mapping_name :

- azimuthal_equidistant

- latitude_of_projection_origin :

- -32.12641143798828

- longitude_of_projection_origin :

- -64.7283706665039

- false_easting :

- 0.0

- false_northing :

- 0.0

array(0)

- x(azimuth, range)float320.0 0.9202 1.84 ... -889.2 -890.0

- standard_name :

- east_west_distance_from_radar

- units :

- meters

array([[ 0.0000000e+00, 9.2021906e-01, 1.8404381e+00, ..., 1.0093144e+03, 1.0102344e+03, 1.0111542e+03], [ 0.0000000e+00, 2.7795098e+00, 5.5590196e+00, ..., 3.0486211e+03, 3.0513997e+03, 3.0541780e+03], [ 0.0000000e+00, 4.5229301e+00, 9.0458603e+00, ..., 4.9608394e+03, 4.9653608e+03, 4.9698818e+03], ..., [-0.0000000e+00, -4.2595582e+00, -8.5191164e+00, ..., -4.6719673e+03, -4.6762256e+03, -4.6804829e+03], [-0.0000000e+00, -2.5543070e+00, -5.1086140e+00, ..., -2.8016143e+03, -2.8041680e+03, -2.8067209e+03], [-0.0000000e+00, -8.0998236e-01, -1.6199647e+00, ..., -8.8840460e+02, -8.8921436e+02, -8.9002393e+02]], dtype=float32)

- y(azimuth, range)float320.0 99.98 ... 1.098e+05 1.099e+05

- standard_name :

- north_south_distance_from_radar

- units :

- meters

array([[0.00000000e+00, 9.99787750e+01, 1.99957550e+02, ..., 1.09658688e+05, 1.09758641e+05, 1.09858570e+05], [0.00000000e+00, 9.99443665e+01, 1.99888733e+02, ..., 1.09620953e+05, 1.09720867e+05, 1.09820766e+05], [0.00000000e+00, 9.98806534e+01, 1.99761307e+02, ..., 1.09551070e+05, 1.09650922e+05, 1.09750758e+05], ..., [0.00000000e+00, 9.98922348e+01, 1.99784470e+02, ..., 1.09563773e+05, 1.09663633e+05, 1.09763484e+05], [0.00000000e+00, 9.99503784e+01, 1.99900757e+02, ..., 1.09627539e+05, 1.09727461e+05, 1.09827367e+05], [0.00000000e+00, 9.99797287e+01, 1.99959457e+02, ..., 1.09659734e+05, 1.09759688e+05, 1.09859617e+05]], dtype=float32)

- z(azimuth, range)float321.141e+03 1.142e+03 ... 2.778e+03

- standard_name :

- height_above_ground

- units :

- meters

array([[1141., 1142., 1142., ..., 2774., 2776., 2778.], [1141., 1142., 1142., ..., 2774., 2776., 2778.], [1141., 1142., 1142., ..., 2774., 2776., 2778.], ..., [1141., 1142., 1142., ..., 2774., 2776., 2778.], [1141., 1142., 1142., ..., 2774., 2776., 2778.], [1141., 1142., 1142., ..., 2774., 2776., 2778.]], dtype=float32)

- attenuation_corrected_differential_reflectivity(azimuth, range)float32...

- long_name :

- Rainfall attenuation-corrected differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- attenuation_corrected_differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Differential reflectivity estimated at lag 1 corrected for rainfall attenuation.

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.73713

[397100 values with dtype=float32]

- attenuation_corrected_reflectivity_h(azimuth, range)float32...

- long_name :

- Rainfall attenuation-corrected reflectivity, horizontal channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- censor_mask(azimuth, range)int32...

- long_name :

- Censor Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16 32 64 128 256 512 1024 2048]

- flag_meanings :

- horizontal_snr_below_noise_threshold vertical_snr_below_noise_threshold horizontal_ccor_below_ccor_threshold vertical_ccor_below_ccor_threshold horizontal_sqi_below_sqi1_threshold vertical_sqi_below_sqi1_threshold horizontal_sqi_below_sqi2_threshold vertical_sqi_below_sqi2_threshold horizontal_sigpow_below_sigpow_threshold vertical_sigpow_below_sigpow_threshold urhohv_below_rhohv_threshold censored_by_clutter_micro_suppression

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]

- classification_mask(azimuth, range)int32...

- long_name :

- Classification Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16]

- flag_meanings :

- second_trip third_trip interference clutter sunspoke

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]

- copol_correlation_coeff(azimuth, range)float32...

- long_name :

- Copolar correlation coefficient (also known as rhohv)

- units :

- 1

- standard_name :

- radar_correlation_coefficient_hv

[397100 values with dtype=float32]

- differential_phase(azimuth, range)float32...

- long_name :

- Differential propagation phase shift

- units :

- degree

- standard_name :

- radar_differential_phase_hv

[397100 values with dtype=float32]

- differential_reflectivity(azimuth, range)float32...

- long_name :

- Differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Differential reflectivity estimated at lag 1

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- mean_doppler_velocity(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity, positive for motion away from the instrument

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- mean_doppler_velocity_v(azimuth, range)float32...

- long_name :

- Doppler velocity, vertical channel

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- normalized_coherent_power(azimuth, range)float32...

- long_name :

- Normalized coherent power, also known as SQI.

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- normalized_coherent_power_v(azimuth, range)float32...

- long_name :

- Normalized coherent power, also known as SQI, Vertical Channel

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- reflectivity(azimuth, range)float32...

- long_name :

- Equivalent reflectivity factor

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- reflectivity_v(azimuth, range)float32...

- long_name :

- Equivalent reflectivity factor, vertical channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

[397100 values with dtype=float32]

- signal_to_noise_ratio_copolar_h(azimuth, range)float32...

- long_name :

- Signal-to-noise ratio, horizontal channel

- units :

- dB

- standard_name :

- radar_signal_to_noise_ratio_copolar_h

[397100 values with dtype=float32]

- signal_to_noise_ratio_copolar_v(azimuth, range)float32...

- long_name :

- Signal-to-noise ratio, vertical channel

- units :

- dB

- standard_name :

- radar_signal_to_noise_ratio_copolar_v

[397100 values with dtype=float32]

- specific_attenuation(azimuth, range)float64...

- long_name :

- Specific attenuation

- units :

- dB/km

- standard_name :

- specific_attenuation

- valid_min :

- 0.0

- valid_max :

- 1.0

[397100 values with dtype=float64]

- specific_differential_attenuation(azimuth, range)float64...

- long_name :

- Specific Differential Attenuation

- units :

- dB/km

[397100 values with dtype=float64]

- specific_differential_phase(azimuth, range)float32...

- long_name :

- Specific differential phase (KDP)

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

[397100 values with dtype=float32]

- spectral_width(azimuth, range)float32...

- long_name :

- Spectral width

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- spectral_width_v(azimuth, range)float32...

- long_name :

- Spectral Width, Vertical Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- uncorrected_copol_correlation_coeff(azimuth, range)float32...

- long_name :

- Uncorrected copolar correlation coefficient

- units :

- 1

- standard_name :

- radar_correlation_coefficient_hv

[397100 values with dtype=float32]

- uncorrected_differential_phase(azimuth, range)float32...

- long_name :

- Uncorrected Differential Phase

- units :

- degree

- standard_name :

- radar_differential_phase_hv

[397100 values with dtype=float32]

- uncorrected_differential_reflectivity(azimuth, range)float32...

- long_name :

- Uncorrected Differential Reflectivity, Vertical Channel

- units :

- dBZ

- standard_name :

- radar_differential_reflectivity_hv

[397100 values with dtype=float32]

- uncorrected_differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Uncorrected differential reflectivity estimated at lag 1

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

[397100 values with dtype=float32]

- uncorrected_mean_doppler_velocity_h(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity from horizontal channel, positive for motion away from the instrument, uncorrected

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- uncorrected_mean_doppler_velocity_v(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity from vertical channel, positive for motion away from the instrument, uncorrected

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- uncorrected_reflectivity_h(azimuth, range)float32...

- long_name :

- Uncorrected Reflectivity, Horizontal Channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- uncorrected_reflectivity_v(azimuth, range)float32...

- long_name :

- Uncorrected Reflectivity, Vertical Channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

[397100 values with dtype=float32]

- uncorrected_spectral_width_h(azimuth, range)float32...

- long_name :

- Uncorrected Spectral Width, Horizontal Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- uncorrected_spectral_width_v(azimuth, range)float32...

- long_name :

- Uncorrected Spectral Width, Vertical Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- unthresholded_power_copolar_h(azimuth, range)float32...

- long_name :

- Received copolar power, h channel, without thresholding.

- units :

- dBm

- standard_name :

- radar_received_signal_power_copolar_h

[397100 values with dtype=float32]

- unthresholded_power_copolar_v(azimuth, range)float32...

- long_name :

- Received copolar power, v channel, without thresholding

- units :

- dBm

- standard_name :

- radar_received_signal_power_copolar_v

[397100 values with dtype=float32]

- ground_clutter(azimuth, range)int64...

- long_name :

- Clutter mask

- standard_name :

- clutter_mask

- valid_min :

- 0

- valid_max :

- 1

- units :

- 1

- flag_values :

- [0 1]

- flag_meanings :

- no_clutter clutter

[397100 values with dtype=int64]

- clutter_masked_velocity(azimuth, range)float32...

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- long_name :

- Radial mean Doppler velocity, positive for motion away from the instrument, clutter removed

[397100 values with dtype=float32]

- sounding_temperature(azimuth, range)float32...

- long_name :

- Interpolated profile

- standard_name :

- interpolated_profile

- units :

- deg_C

[397100 values with dtype=float32]

- height(azimuth, range)float32...

- long_name :

- Height of radar beam

- standard_name :

- height

- units :

- m

[397100 values with dtype=float32]

- signal_to_noise_ratio(azimuth, range)float32...

- long_name :

- Signal to noise ratio

- units :

- dB

- standard_name :

- signal_to_noise_ratio

[397100 values with dtype=float32]

- velocity_texture(azimuth, range)float64...

- long_name :

- Mean dopper velocity

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- units :

- m/s

[397100 values with dtype=float64]

- gate_id(azimuth, range)int64...

- long_name :

- Classification of dominant scatterer

- units :

- 1

- valid_max :

- 6

- valid_min :

- 0

- flag_values :

- [0 1 2 3 4 5 6]

- flag_meanings :

- multi_trip rain snow no_scatter melting clutter terrain_blockage

[397100 values with dtype=int64]
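The gate_id field stores one integer per gate. Its flag_values / flag_meanings attributes map each integer to a scatterer class. Below is a minimal sketch of turning those codes into readable labels or boolean masks. It assumes the attributes have already been read into Python. The 2 x 3 gate_id patch is made-up illustration data, and decode_flag_values is a hypothetical helper, not part of Py-ART or xradar.

```python
import numpy as np


def decode_flag_values(codes, flag_values, flag_meanings):
    """Map integer class codes to names using CF-style flag_values/flag_meanings."""
    lookup = dict(zip(np.asarray(flag_values).ravel().tolist(), flag_meanings.split()))
    codes = np.asarray(codes)
    # Codes that are not listed in flag_values are labelled "unknown"
    labels = [lookup.get(int(c), "unknown") for c in codes.ravel()]
    return np.array(labels).reshape(codes.shape)


# Attribute values copied from the gate_id listing above
flag_values = np.array([0, 1, 2, 3, 4, 5, 6])
flag_meanings = "multi_trip rain snow no_scatter melting clutter terrain_blockage"

# Hypothetical 2 x 3 patch of gate_id values (illustration only)
gate_id = np.array([[1, 1, 3], [4, 2, 5]])

labels = decode_flag_values(gate_id, flag_values, flag_meanings)

# Boolean mask of gates classified as rain
rain_code = flag_values[flag_meanings.split().index("rain")]
rain_mask = gate_id == rain_code
```

With real data you would pass the gate_id values and the two attribute strings from the opened sweep in place of the hand-typed arrays.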

- partial_beam_blockage(azimuth, range)float64...

- long_name :

- Partial Beam Block Fraction

- units :

- unitless

- standard_name :

- partial_beam_block

- comment :

- Partial beam block fraction due to terrain.

[397100 values with dtype=float64]

- cumulative_beam_blockage(azimuth, range)float64...

- long_name :

- Cumulative Beam Block Fraction

- units :

- unitless

- standard_name :

- cumulative_beam_block

- comment :

- Cumulative beam block fraction due to terrain.

[397100 values with dtype=float64]

- simulated_velocity(azimuth, range)float64...

- long_name :

- Simulated mean doppler velocity

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- units :

- m/s

[397100 values with dtype=float64]

- corrected_velocity(azimuth, range)float32...

- long_name :

- Corrected mean doppler velocity

- standard_name :

- corrected_radial_velocity_of_scatterers_away_from_instrument

- valid_min :

- -82.46

- valid_max :

- 82.46

- units :

- m/s

[397100 values with dtype=float32]

- unfolded_differential_phase(azimuth, range)float64...

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- long_name :

- Unfolded Differential Phase

[397100 values with dtype=float64]

- corrected_differential_phase(azimuth, range)float64...

- long_name :

- Uncorrected Differential Phase

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- valid_min :

- 0.0

- valid_max :

- 400.0

[397100 values with dtype=float64]

- filtered_corrected_differential_phase(azimuth, range)float64...

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- valid_min :

- 0.0

- valid_max :

- 400.0

- long_name :

- Filtered Corrected Differential Phase

[397100 values with dtype=float64]

- corrected_specific_diff_phase(azimuth, range)float64...

- long_name :

- Specific differential phase (KDP)

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

[397100 values with dtype=float64]

- filtered_corrected_specific_diff_phase(azimuth, range)float64...

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

- long_name :

- Filtered Corrected Specific differential phase (KDP)

[397100 values with dtype=float64]
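Specific differential phase is conventionally defined as half the range derivative of the two-way differential phase, K_DP = 0.5 dPhi_DP/dr. That is why it is reported in degree/km while the phase fields are in degree. Below is a minimal finite-difference sketch of that definition. It assumes a phase array shaped (azimuth, range) with the constant 100 m gate spacing given in this file's range attributes. The retrieval behind corrected_specific_diff_phase is more robust than a plain derivative, so treat this as an illustration of the definition rather than a reproduction of CMAC.

```python
import numpy as np


def kdp_from_phidp(phidp_deg, gate_spacing_m):
    """Estimate KDP (degree/km) as half the range derivative of PhiDP (degree)."""
    dr_km = gate_spacing_m / 1000.0
    # Central differences along the range axis (the last axis)
    return 0.5 * np.gradient(np.asarray(phidp_deg, dtype=float), dr_km, axis=-1)


# Synthetic test: PhiDP increasing by 2 degree/km along every ray
gate_spacing_m = 100.0
range_km = np.arange(1100) * gate_spacing_m / 1000.0
phidp = np.tile(2.0 * range_km, (3, 1))  # shape (3 rays, 1100 gates)

kdp = kdp_from_phidp(phidp, gate_spacing_m)
```

On real data, differentiating raw phase amplifies noise. The filtered phase field is the more sensible input for this kind of check.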

- corrected_differential_reflectivity(azimuth, range)float64...

- long_name :

- Corrected differential reflectivity

- units :

- dB

- standard_name :

- corrected_log_differential_reflectivity_hv

[397100 values with dtype=float64]

- corrected_reflectivity(azimuth, range)float64...

- long_name :

- Corrected reflectivity

- units :

- dBZ

- standard_name :

- corrected_equivalent_reflectivity_factor

[397100 values with dtype=float64]

- height_over_iso0(azimuth, range)float32...

- standard_name :

- height

- long_name :

- Height of radar beam over freezing level

- units :

- m

[397100 values with dtype=float32]

- path_integrated_attenuation(azimuth, range)float64...

- units :

- dB

- long_name :

- Path Integrated Attenuation

[397100 values with dtype=float64]

- path_integrated_differential_attenuation(azimuth, range)float64...

- units :

- dB

- long_name :

- Path Integrated Differential Attenuation

[397100 values with dtype=float64]
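Path-integrated attenuation is commonly the range integral of specific attenuation, doubled because the pulse travels out and back: PIA(r) = 2 * integral from 0 to r of A(s) ds. Below is a sketch of the discrete version. It assumes specific attenuation in dB/km on gates spaced 100 m apart. The listing does not state which convention CMAC used, so the factor of two is an assumption worth checking against the stored path_integrated_attenuation field.

```python
import numpy as np


def path_integrated_attenuation(spec_att_db_per_km, gate_spacing_m):
    """Two-way PIA (dB) from specific attenuation (dB/km), integrated outward along range."""
    dr_km = gate_spacing_m / 1000.0
    # Running sum along range approximates the integral from the radar to each gate
    return 2.0 * np.cumsum(np.asarray(spec_att_db_per_km, dtype=float), axis=-1) * dr_km


# Synthetic ray with a constant 0.1 dB/km specific attenuation over 1100 gates
spec_att = np.full((1, 1100), 0.1)
pia = path_integrated_attenuation(spec_att, gate_spacing_m=100.0)
```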

- rain_rate_A(azimuth, range)float64...

- long_name :

- rainfall_rate

- units :

- mm/hr

- standard_name :

- rainfall_rate

- valid_min :

- 0.0

- valid_max :

- 400.0

- comment :

- Rain rate calculated from specific_attenuation, R=51.3*specific_attenuation**0.81, note R=0.0 where norm coherent power < 0.4 or rhohv < 0.8

[397100 values with dtype=float64]
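The comment on rain_rate_A spells out the retrieval: R = 51.3 * A**0.81, with R set to 0 where the normalized coherent power is below 0.4 or rhohv is below 0.8. Below is a direct numpy transcription of that comment. rain_rate_from_attenuation is a hypothetical helper name. Clipping negative attenuation to zero is an added assumption, since the comment does not say how negative values are handled.

```python
import numpy as np


def rain_rate_from_attenuation(spec_att, ncp, rhohv, coeff=51.3, exponent=0.81):
    """Rain rate (mm/hr) from specific attenuation (dB/km), following the rain_rate_A comment."""
    # Assumption: negative attenuation estimates are treated as zero before the power law
    spec_att = np.clip(np.asarray(spec_att, dtype=float), 0.0, None)
    rate = coeff * spec_att**exponent
    # Per the comment, R = 0 where normalized coherent power < 0.4 or rhohv < 0.8
    bad = (np.asarray(ncp) < 0.4) | (np.asarray(rhohv) < 0.8)
    return np.where(bad, 0.0, rate)


# Hypothetical gate values: good gate, weak rain, low NCP, low rhohv, negative A
spec_att = np.array([1.0, 0.1, 0.1, 0.1, -0.05])
ncp = np.array([0.9, 0.9, 0.3, 0.9, 0.9])
rhohv = np.array([0.99, 0.99, 0.99, 0.7, 0.99])

rate = rain_rate_from_attenuation(spec_att, ncp, rhohv)
```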

- sweep_number()float64...

- long_name :

- Sweep index number 0 based

- units :

- 1

[1 values with dtype=float64]

- sweep_fixed_angle()float32...

- long_name :

- Ray target fixed angle

- units :

- degree

[1 values with dtype=float32]

- sweep_mode()<U6 'sector'

array('sector', dtype='<U6')

- prt(azimuth)float32...

- long_name :

- Pulse repetition time

- units :

- s

[361 values with dtype=float32]

- nyquist_velocity(azimuth)float32...

- long_name :

- Unambiguous doppler velocity

- units :

- m/s

- meta_group :

- instrument_parameters

[361 values with dtype=float32]
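For a single-PRT scan, the unambiguous (Nyquist) velocity follows from the pulse repetition time and the radar wavelength: v_N = wavelength / (4 * PRT). Below is a sketch of that textbook relation. The wavelength is not part of this listing, so the 0.0533 m value is only an assumed, typical C-band placeholder. Prefer the nyquist_velocity field stored in the file whenever it is available.

```python
def nyquist_velocity(wavelength_m, prt_s):
    """Unambiguous velocity (m/s) for a single-PRT scan: v_N = wavelength / (4 * PRT)."""
    return wavelength_m / (4.0 * prt_s)


# Assumed values for illustration only (not read from the file)
ASSUMED_WAVELENGTH_M = 0.0533  # typical C-band wavelength
example_prt_s = 1.0e-3

v_n = nyquist_velocity(ASSUMED_WAVELENGTH_M, example_prt_s)
```

Dual-PRT or staggered-PRT schemes extend this limit, so the relation is only a first consistency check against the stored values.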

<xarray.DatasetView> Size: 122MB
Dimensions:  (azimuth: 361, range: 1100)
Coordinates:
    time        (azimuth) datetime64[ns] 3kB ...
  * range       (range) float32 4kB ...
    elevation   (azimuth) float32 1kB ...
  * azimuth     (azimuth) float32 1kB ...
    latitude    float32 4B -32.13
    longitude   float32 4B -64.73
    altitude    float32 4B 1.141e+03
    crs_wkt     int64 8B 0
    x           (azimuth, range) float32 2MB ...
    y           (azimuth, range) float32 2MB ...
    z           (azimuth, range) float32 2MB ...
Data variables: (12/61)
    attenuation_corrected_differential_reflectivity        (azimuth, range) float32 2MB ...
    attenuation_corrected_differential_reflectivity_lag_1  (azimuth, range) float32 2MB ...
    attenuation_corrected_reflectivity_h                   (azimuth, range) float32 2MB ...
    censor_mask                                            (azimuth, range) int32 2MB ...
    classification_mask                                    (azimuth, range) int32 2MB ...
    copol_correlation_coeff                                (azimuth, range) float32 2MB ...
    ...
    rain_rate_A                                            (azimuth, range) float64 3MB ...
    sweep_number                                           float64 8B ...
    sweep_fixed_angle                                      float32 4B ...
    sweep_mode                                             <U6 24B 'sector'
    prt                                                    (azimuth) float32 1kB ...
    nyquist_velocity                                       (azimuth) float32 1kB ...

sweep_0

- azimuth: 361

- range: 1100

- time(azimuth)datetime64[ns] 2019-01-29T18:30:39.696000 ... 2...

- long_name :

- Time offset from midnight

- standard_name :

- time

array(['2019-01-29T18:30:39.696000000', '2019-01-29T18:30:39.768000000', '2019-01-29T18:30:39.833000000', ..., '2019-01-29T18:30:39.500000000', '2019-01-29T18:30:39.562000000', '2019-01-29T18:30:39.634999000'], dtype='datetime64[ns]')

- range(range)float32 0.0 100.0 ... 1.098e+05 1.099e+05

- long_name :

- Range to measurement volume

- units :

- m

- meters_between_gates :

- 100.0

- meters_to_center_of_first_gate :

- 50.0

- spacing_is_constant :

- True

- standard_name :

- projection_range_coordinate

- axis :

- radial_range_coordinate

array([0.000e+00, 1.000e+02, 2.000e+02, ..., 1.097e+05, 1.098e+05, 1.099e+05], dtype=float32)

- elevation(azimuth)float32 1.494 1.494 1.494 ... 1.494 1.494

- long_name :

- Elevation angle from horizontal plane

- units :

- degree

- standard_name :

- sensor_to_target_elevation_angle

- axis :

- radial_elevation_coordinate

array([1.494141, 1.494141, 1.494141, ..., 1.494141, 1.494141, 1.494141], dtype=float32)

- azimuth(azimuth)float32 0.5685 1.588 2.568 ... 358.6 359.6

- long_name :

- Azimuth angle from true north

- units :

- degree

- standard_name :

- sensor_to_target_azimuth_angle

- axis :

- radial_azimuth_coordinate

array([ 0.568542, 1.587524, 2.568054, ..., 357.5583 , 358.56903 , 359.57977 ], dtype=float32)

- latitude()float32 -32.13

- long_name :

- Latitude

- units :

- degree_N

- standard_name :

- latitude

- valid_min :

- -90.0

- valid_max :

- 90.0

array(-32.12641, dtype=float32)

- longitude()float32 -64.73

- long_name :

- Longitude

- units :

- degree_E

- standard_name :

- longitude

- valid_min :

- -180.0

- valid_max :

- 180.0

array(-64.72837, dtype=float32)

- altitude()float32 1.141e+03

- long_name :

- Altitude

- units :

- m

- standard_name :

- altitude

array(1141., dtype=float32)

- crs_wkt()int64 0

- crs_wkt :

- PROJCRS["unknown",BASEGEOGCRS["unknown",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]],ID["EPSG",6326]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8901]]],CONVERSION["unknown",METHOD["Azimuthal Equidistant",ID["EPSG",1125]],PARAMETER["Latitude of natural origin",-32.1264114379883,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",-64.7283706665039,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["False easting",0,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- unknown

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- unknown

- grid_mapping_name :

- azimuthal_equidistant

- latitude_of_projection_origin :

- -32.12641143798828

- longitude_of_projection_origin :

- -64.7283706665039

- false_easting :

- 0.0

- false_northing :

- 0.0

array(0)
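The crs_wkt attributes describe an azimuthal equidistant projection centred on the radar. The x and y coordinates are therefore metres east and north of (-32.126, -64.728). Below is a minimal sketch of converting them back to longitude and latitude with pyproj, assuming pyproj is installed (cartopy, imported at the top of this notebook, depends on it). The sketch builds the CRS from a PROJ string whose origin is copied from latitude_of_projection_origin / longitude_of_projection_origin. pyproj.CRS.from_wkt applied to the stored WKT string should describe the same projection.

```python
from pyproj import CRS, Transformer

# Projection origin copied from the crs_wkt attributes above
LAT0 = -32.12641143798828
LON0 = -64.7283706665039

radar_crs = CRS.from_proj4(
    f"+proj=aeqd +lat_0={LAT0} +lon_0={LON0} +x_0=0 +y_0=0 +datum=WGS84 +units=m"
)
# always_xy=True keeps the (x, y) -> (lon, lat) axis order
to_lonlat = Transformer.from_crs(radar_crs, "EPSG:4326", always_xy=True)


def xy_to_lonlat(x, y):
    """Convert radar-relative x/y (metres) to longitude/latitude (degrees)."""
    return to_lonlat.transform(x, y)


lon0, lat0 = xy_to_lonlat(0.0, 0.0)  # the radar itself
lon_n, lat_n = xy_to_lonlat(0.0, 109799.336)  # about 110 km due north
```

The transformer also accepts whole numpy arrays, so the full x and y grids of a sweep can be converted in one call.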

- x(azimuth, range)float32 0.0 0.9918 1.984 ... -804.6 -805.4

- standard_name :

- east_west_distance_from_radar

- units :

- meters

array([[ 0.0000000e+00, 9.9180675e-01, 1.9836129e+00, ..., 1.0875859e+03, 1.0885768e+03, 1.0895677e+03], [ 0.0000000e+00, 2.7690840e+00, 5.5381660e+00, ..., 3.0364954e+03, 3.0392620e+03, 3.0420288e+03], [ 0.0000000e+00, 4.4784737e+00, 8.9569445e+00, ..., 4.9109614e+03, 4.9154360e+03, 4.9199106e+03], ..., [-0.0000000e+00, -4.2582603e+00, -8.5165186e+00, ..., -4.6694824e+03, -4.6737368e+03, -4.6779917e+03], [-0.0000000e+00, -2.4960680e+00, -4.9921346e+00, ..., -2.7371143e+03, -2.7396082e+03, -2.7421021e+03], [-0.0000000e+00, -7.3309886e-01, -1.4661974e+00, ..., -8.0389453e+02, -8.0462701e+02, -8.0535944e+02]], dtype=float32)

- y(azimuth, range)float32 0.0 99.95 ... 1.097e+05 1.098e+05

- standard_name :

- north_south_distance_from_radar

- units :

- meters

array([[0.00000000e+00, 9.99476318e+01, 1.99895203e+02, ..., 1.09599609e+05, 1.09699477e+05, 1.09799336e+05], [0.00000000e+00, 9.99141846e+01, 1.99828308e+02, ..., 1.09562938e+05, 1.09662766e+05, 1.09762594e+05], [0.00000000e+00, 9.98521729e+01, 1.99704285e+02, ..., 1.09494938e+05, 1.09594703e+05, 1.09694469e+05], ..., [0.00000000e+00, 9.98618088e+01, 1.99723557e+02, ..., 1.09505500e+05, 1.09605273e+05, 1.09705047e+05], [0.00000000e+00, 9.99213791e+01, 1.99842697e+02, ..., 1.09570828e+05, 1.09670664e+05, 1.09770500e+05], [0.00000000e+00, 9.99498672e+01, 1.99899673e+02, ..., 1.09602062e+05, 1.09701930e+05, 1.09801789e+05]], dtype=float32)

- z(azimuth, range)float32 1.141e+03 1.143e+03 ... 4.716e+03

- standard_name :

- height_above_ground

- units :

- meters

array([[1141., 1143., 1146., ..., 4708., 4712., 4716.], [1141., 1143., 1146., ..., 4708., 4712., 4716.], [1141., 1143., 1146., ..., 4708., 4712., 4716.], ..., [1141., 1143., 1146., ..., 4708., 4712., 4716.], [1141., 1143., 1146., ..., 4708., 4712., 4716.], [1141., 1143., 1146., ..., 4708., 4712., 4716.]], dtype=float32)
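For this 1.494 degree sweep, the z coordinate grows from 1141 m at the radar to about 4716 m at 109.9 km. That is consistent with the standard 4/3 effective earth radius beam-height model, h = sqrt(r**2 + (k_e a)**2 + 2 r k_e a sin(theta)) - k_e a, plus the radar altitude. Below is a sketch of that formula, assuming k_e = 4/3 and a = 6371 km. xradar's georeferencing may differ in small details, so expect agreement to within a few metres rather than exactly.

```python
import numpy as np

EARTH_RADIUS_M = 6371000.0  # mean earth radius (assumed)
KE = 4.0 / 3.0  # effective earth radius factor for standard refraction (assumed)


def beam_height(range_m, elevation_deg, radar_alt_m=0.0):
    """Beam-centre altitude (m) under the 4/3 effective earth radius model."""
    r = np.asarray(range_m, dtype=float)
    theta = np.deg2rad(elevation_deg)
    re = KE * EARTH_RADIUS_M
    height_above_radar = np.sqrt(r**2 + re**2 + 2.0 * r * re * np.sin(theta)) - re
    return height_above_radar + radar_alt_m


# Last gate of this sweep: range 109.9 km, elevation 1.494141 deg, radar altitude 1141 m
z_far = beam_height(109900.0, 1.494141, radar_alt_m=1141.0)
```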

- attenuation_corrected_differential_reflectivity(azimuth, range)float32...

- long_name :

- Rainfall attenuation-corrected differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- attenuation_corrected_differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Differential reflectivity estimated at lag 1 corrected for rainfall attenuation.

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.73713

[397100 values with dtype=float32]

- attenuation_corrected_reflectivity_h(azimuth, range)float32...

- long_name :

- Rainfall attenuation-corrected reflectivity, horizontal channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- censor_mask(azimuth, range)int32...

- long_name :

- Censor Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16 32 64 128 256 512 1024 2048]

- flag_meanings :

- horizontal_snr_below_noise_threshold vertical_snr_below_noise_threshold horizontal_ccor_below_ccor_threshold vertical_ccor_below_ccor_threshold horizontal_sqi_below_sqi1_threshold vertical_sqi_below_sqi1_threshold horizontal_sqi_below_sqi2_threshold vertical_sqi_below_sqi2_threshold horizontal_sigpow_below_sigpow_threshold vertical_sigpow_below_sigpow_threshold urhohv_below_rhohv_threshold censored_by_clutter_micro_suppression

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]
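censor_mask is a bit field: each entry in flag_masks is a power of two, and one gate can carry several censoring reasons at once. Below is a minimal sketch of decoding it with a bitwise AND. The masks and meanings are copied from the attributes above. decode_bitmask and active_flags are hypothetical helper names, and the example mask values are made up.

```python
import numpy as np

# Copied from the censor_mask attributes above
FLAG_MASKS = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048]
FLAG_MEANINGS = (
    "horizontal_snr_below_noise_threshold vertical_snr_below_noise_threshold "
    "horizontal_ccor_below_ccor_threshold vertical_ccor_below_ccor_threshold "
    "horizontal_sqi_below_sqi1_threshold vertical_sqi_below_sqi1_threshold "
    "horizontal_sqi_below_sqi2_threshold vertical_sqi_below_sqi2_threshold "
    "horizontal_sigpow_below_sigpow_threshold vertical_sigpow_below_sigpow_threshold "
    "urhohv_below_rhohv_threshold censored_by_clutter_micro_suppression"
).split()


def decode_bitmask(values, flag_masks=FLAG_MASKS, flag_meanings=FLAG_MEANINGS):
    """Return one boolean array per flag: True where that bit is set."""
    values = np.asarray(values)
    return {name: (values & bit) != 0 for bit, name in zip(flag_masks, flag_meanings)}


def active_flags(value, flag_masks=FLAG_MASKS, flag_meanings=FLAG_MEANINGS):
    """List the flag names set in a single integer mask value."""
    return [name for bit, name in zip(flag_masks, flag_meanings) if int(value) & bit]


# Hypothetical censor_mask values: clean gate, both SNR bits, clutter micro-suppression
example = np.array([0, 3, 2048])
flags = decode_bitmask(example)
uncensored = example == 0
```

The same helpers work for classification_mask if you pass its own flag_masks and flag_meanings.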

- classification_mask(azimuth, range)int32...

- long_name :

- Classification Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16]

- flag_meanings :

- second_trip third_trip interference clutter sunspoke

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]

- copol_correlation_coeff(azimuth, range)float32...

- long_name :

- Copolar correlation coefficient (also known as rhohv)

- units :

- 1

- standard_name :

- radar_correlation_coefficient_hv

[397100 values with dtype=float32]

- differential_phase(azimuth, range)float32...

- long_name :

- Differential propagation phase shift

- units :

- degree

- standard_name :

- radar_differential_phase_hv

[397100 values with dtype=float32]

- differential_reflectivity(azimuth, range)float32...

- long_name :

- Differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Differential reflectivity estimated at lag 1

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- mean_doppler_velocity(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity, positive for motion away from the instrument

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- mean_doppler_velocity_v(azimuth, range)float32...

- long_name :

- Doppler velocity, vertical channel

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- normalized_coherent_power(azimuth, range)float32...

- long_name :

- Normalized coherent power, also known as SQI.

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- normalized_coherent_power_v(azimuth, range)float32...

- long_name :

- Normalized coherent power, also known as SQI, Vertical Channel

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- reflectivity(azimuth, range)float32...

- long_name :

- Equivalent reflectivity factor

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- reflectivity_v(azimuth, range)float32...

- long_name :

- Equivalent reflectivity factor, vertical channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

[397100 values with dtype=float32]

- signal_to_noise_ratio_copolar_h(azimuth, range)float32...

- long_name :

- Signal-to-noise ratio, horizontal channel

- units :

- dB

- standard_name :

- radar_signal_to_noise_ratio_copolar_h

[397100 values with dtype=float32]

- signal_to_noise_ratio_copolar_v(azimuth, range)float32...

- long_name :

- Signal-to-noise ratio, vertical channel

- units :

- dB

- standard_name :

- radar_signal_to_noise_ratio_copolar_v

[397100 values with dtype=float32]

- specific_attenuation(azimuth, range)float64...

- long_name :

- Specific attenuation

- units :

- dB/km

- standard_name :

- specific_attenuation

- valid_min :

- 0.0

- valid_max :

- 1.0

[397100 values with dtype=float64]

- specific_differential_attenuation(azimuth, range)float64...

- long_name :

- Specific Differential Attenuation

- units :

- dB/km

[397100 values with dtype=float64]

- specific_differential_phase(azimuth, range)float32...

- long_name :

- Specific differential phase (KDP)

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

[397100 values with dtype=float32]

- spectral_width(azimuth, range)float32...

- long_name :

- Spectral width

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- spectral_width_v(azimuth, range)float32...

- long_name :

- Spectral Width, Vertical Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- uncorrected_copol_correlation_coeff(azimuth, range)float32...

- long_name :

- Uncorrected copolar correlation coefficient

- units :

- 1

- standard_name :

- radar_correlation_coefficient_hv

[397100 values with dtype=float32]

- uncorrected_differential_phase(azimuth, range)float32...

- long_name :

- Uncorrected Differential Phase

- units :

- degree

- standard_name :

- radar_differential_phase_hv

[397100 values with dtype=float32]

- uncorrected_differential_reflectivity(azimuth, range)float32...

- long_name :

- Uncorrected Differential Reflectivity, Vertical Channel

- units :

- dBZ

- standard_name :

- radar_differential_reflectivity_hv

[397100 values with dtype=float32]

- uncorrected_differential_reflectivity_lag_1(azimuth, range)float32...

- long_name :

- Uncorrected differential reflectivity estimated at lag 1

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

[397100 values with dtype=float32]

- uncorrected_mean_doppler_velocity_h(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity from horizontal channel, positive for motion away from the instrument, uncorrected

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- uncorrected_mean_doppler_velocity_v(azimuth, range)float32...

- long_name :

- Radial mean Doppler velocity from vertical channel, positive for motion away from the instrument, uncorrected

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- uncorrected_reflectivity_h(azimuth, range)float32...

- long_name :

- Uncorrected Reflectivity, Horizontal Channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- uncorrected_reflectivity_v(azimuth, range)float32...

- long_name :

- Uncorrected Reflectivity, Vertical Channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

[397100 values with dtype=float32]

- uncorrected_spectral_width_h(azimuth, range)float32...

- long_name :

- Uncorrected Spectral Width, Horizontal Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- uncorrected_spectral_width_v(azimuth, range)float32...

- long_name :

- Uncorrected Spectral Width, Vertical Channel

- units :

- m/s

- standard_name :

- radar_doppler_spectrum_width

[397100 values with dtype=float32]

- unthresholded_power_copolar_h(azimuth, range)float32...

- long_name :

- Received copolar power, h channel, without thresholding.

- units :

- dBm

- standard_name :

- radar_received_signal_power_copolar_h

[397100 values with dtype=float32]

- unthresholded_power_copolar_v(azimuth, range)float32...

- long_name :

- Received copolar power, v channel, without thresholding

- units :

- dBm

- standard_name :

- radar_received_signal_power_copolar_v

[397100 values with dtype=float32]

- ground_clutter(azimuth, range)int64...

- long_name :

- Clutter mask

- standard_name :

- clutter_mask

- valid_min :

- 0

- valid_max :

- 1

- units :

- 1

- flag_values :

- [0 1]

- flag_meanings :

- no_clutter clutter

[397100 values with dtype=int64]

- clutter_masked_velocity(azimuth, range)float32...

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- long_name :

- Radial mean Doppler velocity, positive for motion away from the instrument, clutter removed

[397100 values with dtype=float32]

- sounding_temperature(azimuth, range)float32...

- long_name :

- Interpolated profile

- standard_name :

- interpolated_profile

- units :

- deg_C

[397100 values with dtype=float32]

- height(azimuth, range)float32...

- long_name :

- Height of radar beam

- standard_name :

- height

- units :

- m

[397100 values with dtype=float32]

- signal_to_noise_ratio(azimuth, range)float32...

- long_name :

- Signal to noise ratio

- units :

- dB

- standard_name :

- signal_to_noise_ratio

[397100 values with dtype=float32]

- velocity_texture(azimuth, range)float64...

- long_name :

- Mean dopper velocity

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- units :

- m/s

[397100 values with dtype=float64]

- gate_id(azimuth, range)int64...

- long_name :

- Classification of dominant scatterer

- units :

- 1

- valid_max :

- 6

- valid_min :

- 0

- flag_values :

- [0 1 2 3 4 5 6]

- flag_meanings :

- multi_trip rain snow no_scatter melting clutter terrain_blockage

[397100 values with dtype=int64]

- partial_beam_blockage(azimuth, range)float64...

- long_name :

- Partial Beam Block Fraction

- units :

- unitless

- standard_name :

- partial_beam_block

- comment :

- Partial beam block fraction due to terrain.

[397100 values with dtype=float64]

- cumulative_beam_blockage(azimuth, range)float64...

- long_name :

- Cumulative Beam Block Fraction

- units :

- unitless

- standard_name :

- cumulative_beam_block

- comment :

- Cumulative beam block fraction due to terrain.

[397100 values with dtype=float64]

- simulated_velocity(azimuth, range)float64...

- long_name :

- Simulated mean doppler velocity

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

- units :

- m/s

[397100 values with dtype=float64]

- corrected_velocity(azimuth, range)float32...

- long_name :

- Corrected mean doppler velocity

- standard_name :

- corrected_radial_velocity_of_scatterers_away_from_instrument

- valid_min :

- -82.46

- valid_max :

- 82.46

- units :

- m/s

[397100 values with dtype=float32]

- unfolded_differential_phase(azimuth, range)float64...

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- long_name :

- Unfolded Differential Phase

[397100 values with dtype=float64]

- corrected_differential_phase(azimuth, range)float64...

- long_name :

- Uncorrected Differential Phase

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- valid_min :

- 0.0

- valid_max :

- 400.0

[397100 values with dtype=float64]

- filtered_corrected_differential_phase(azimuth, range)float64...

- units :

- degree

- standard_name :

- radar_differential_phase_hv

- valid_min :

- 0.0

- valid_max :

- 400.0

- long_name :

- Filtered Corrected Differential Phase

[397100 values with dtype=float64]

- corrected_specific_diff_phase(azimuth, range)float64...

- long_name :

- Specific differential phase (KDP)

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

[397100 values with dtype=float64]

- filtered_corrected_specific_diff_phase(azimuth, range)float64...

- units :

- degree/km

- standard_name :

- radar_specific_differential_phase_hv

- long_name :

- Filtered Corrected Specific differential phase (KDP)

[397100 values with dtype=float64]

- corrected_differential_reflectivity(azimuth, range)float64...

- long_name :

- Corrected differential reflectivity

- units :

- dB

- standard_name :

- corrected_log_differential_reflectivity_hv

[397100 values with dtype=float64]

- corrected_reflectivity(azimuth, range)float64...

- long_name :

- Corrected reflectivity

- units :

- dBZ

- standard_name :

- corrected_equivalent_reflectivity_factor

[397100 values with dtype=float64]

- height_over_iso0(azimuth, range)float32...

- standard_name :

- height

- long_name :

- Height of radar beam over freezing level

- units :

- m

[397100 values with dtype=float32]

- path_integrated_attenuation(azimuth, range)float64...

- units :

- dB

- long_name :

- Path Integrated Attenuation

[397100 values with dtype=float64]

- path_integrated_differential_attenuation(azimuth, range)float64...

- units :

- dB

- long_name :

- Path Integrated Differential Attenuation

[397100 values with dtype=float64]

- rain_rate_A(azimuth, range)float64...

- long_name :

- rainfall_rate

- units :

- mm/hr

- standard_name :

- rainfall_rate

- valid_min :

- 0.0

- valid_max :

- 400.0

- comment :

- Rain rate calculated from specific_attenuation, R=51.3*specific_attenuation**0.81, note R=0.0 where norm coherent power < 0.4 or rhohv < 0.8

[397100 values with dtype=float64]

- sweep_number()float64...

- long_name :

- Sweep index number 0 based

- units :

- 1

[1 values with dtype=float64]

- sweep_fixed_angle()float32...

- long_name :

- Ray target fixed angle

- units :

- degree

[1 values with dtype=float32]

- sweep_mode()<U6 'sector'

array('sector', dtype='<U6')

- prt(azimuth)float32...

- long_name :

- Pulse repetition time

- units :

- s

[361 values with dtype=float32]

- nyquist_velocity(azimuth)float32...

- long_name :

- Unambiguous doppler velocity

- units :

- m/s

- meta_group :

- instrument_parameters

[361 values with dtype=float32]

<xarray.DatasetView> Size: 122MB
Dimensions:  (azimuth: 361, range: 1100)
Coordinates:
    time        (azimuth) datetime64[ns] 3kB ...
  * range       (range) float32 4kB ...
    elevation   (azimuth) float32 1kB ...
  * azimuth     (azimuth) float32 1kB ...
    latitude    float32 4B -32.13
    longitude   float32 4B -64.73
    altitude    float32 4B 1.141e+03
    crs_wkt     int64 8B 0
    x           (azimuth, range) float32 2MB ...
    y           (azimuth, range) float32 2MB ...
    z           (azimuth, range) float32 2MB ...
Data variables: (12/61)
    attenuation_corrected_differential_reflectivity        (azimuth, range) float32 2MB ...
    attenuation_corrected_differential_reflectivity_lag_1  (azimuth, range) float32 2MB ...
    attenuation_corrected_reflectivity_h                   (azimuth, range) float32 2MB ...
    censor_mask                                            (azimuth, range) int32 2MB ...
    classification_mask                                    (azimuth, range) int32 2MB ...
    copol_correlation_coeff                                (azimuth, range) float32 2MB ...
    ...
    rain_rate_A                                            (azimuth, range) float64 3MB ...
    sweep_number                                           float64 8B ...
    sweep_fixed_angle                                      float32 4B ...
    sweep_mode                                             <U6 24B 'sector'
    prt                                                    (azimuth) float32 1kB ...
    nyquist_velocity                                       (azimuth) float32 1kB ...

sweep_1

- azimuth: 361

- range: 1100

- time(azimuth)datetime64[ns] 2019-01-29T18:31:03.685000 ... 2...

- long_name :

- Time offset from midnight

- standard_name :

- time

array(['2019-01-29T18:31:03.685000000', '2019-01-29T18:31:03.755000000', '2019-01-29T18:31:03.815999000', ..., '2019-01-29T18:31:03.482000000', '2019-01-29T18:31:03.552999000', '2019-01-29T18:31:03.614000000'], dtype='datetime64[ns]')

- range(range)float32 0.0 100.0 ... 1.098e+05 1.099e+05

- long_name :

- Range to measurement volume

- units :

- m

- meters_between_gates :

- 100.0

- meters_to_center_of_first_gate :

- 50.0

- spacing_is_constant :

- True

- standard_name :

- projection_range_coordinate

- axis :

- radial_range_coordinate

array([0.000e+00, 1.000e+02, 2.000e+02, ..., 1.097e+05, 1.098e+05, 1.099e+05], dtype=float32)

- elevation(azimuth)float32 2.593 2.593 2.593 ... 2.593 2.593

- long_name :

- Elevation angle from horizontal plane

- units :

- degree

- standard_name :

- sensor_to_target_elevation_angle

- axis :

- radial_elevation_coordinate

array([2.592773, 2.592773, 2.592773, ..., 2.592773, 2.592773, 2.592773], dtype=float32)

- azimuth(azimuth)float32 0.6262 1.604 2.604 ... 358.6 359.6

- long_name :

- Azimuth angle from true north

- units :

- degree

- standard_name :

- sensor_to_target_azimuth_angle

- axis :

- radial_azimuth_coordinate

array([ 0.626221, 1.604004, 2.60376 , ..., 357.58026 , 358.55804 , 359.5578 ], dtype=float32)

- latitude()float32 -32.13

- long_name :

- Latitude

- units :

- degree_N

- standard_name :

- latitude

- valid_min :

- -90.0

- valid_max :

- 90.0

array(-32.12641, dtype=float32)

- longitude()float32 -64.73

- long_name :

- Longitude

- units :

- degree_E

- standard_name :

- longitude

- valid_min :

- -180.0

- valid_max :

- 180.0

array(-64.72837, dtype=float32)

- altitude()float32 1.141e+03

- long_name :

- Altitude

- units :

- m

- standard_name :

- altitude

array(1141., dtype=float32)

- crs_wkt()int64 0

- crs_wkt :

- PROJCRS["unknown",BASEGEOGCRS["unknown",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]],ID["EPSG",6326]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8901]]],CONVERSION["unknown",METHOD["Azimuthal Equidistant",ID["EPSG",1125]],PARAMETER["Latitude of natural origin",-32.1264114379883,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",-64.7283706665039,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["False easting",0,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- unknown

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- unknown

- grid_mapping_name :

- azimuthal_equidistant

- latitude_of_projection_origin :

- -32.12641143798828

- longitude_of_projection_origin :

- -64.7283706665039

- false_easting :

- 0.0

- false_northing :

- 0.0

array(0)

- x(azimuth, range)float32 0.0 1.092 2.183 ... -845.9 -846.7

- standard_name :

- east_west_distance_from_radar

- units :

- meters

array([[ 0.0000000e+00, 1.0916736e+00, 2.1833458e+00, ..., 1.1968013e+03, 1.1978915e+03, 1.1989817e+03], [ 0.0000000e+00, 2.7959070e+00, 5.5918107e+00, ..., 3.0651516e+03, 3.0679438e+03, 3.0707358e+03], [ 0.0000000e+00, 4.5375919e+00, 9.0751791e+00, ..., 4.9745601e+03, 4.9790918e+03, 4.9836230e+03], ..., [-0.0000000e+00, -4.2170882e+00, -8.4341707e+00, ..., -4.6231919e+03, -4.6274033e+03, -4.6316147e+03], [-0.0000000e+00, -2.5135009e+00, -5.0269990e+00, ..., -2.7555498e+03, -2.7580603e+03, -2.7605701e+03], [-0.0000000e+00, -7.7088952e-01, -1.5417781e+00, ..., -8.4512579e+02, -8.4589575e+02, -8.4666553e+02]], dtype=float32)

- y(azimuth, range)float32 0.0 99.88 ... 1.096e+05 1.097e+05

- standard_name :

- north_south_distance_from_radar

- units :

- meters

array([[0.00000000e+00, 9.98782043e+01, 1.99756287e+02, ..., 1.09496430e+05, 1.09596180e+05, 1.09695914e+05], [0.00000000e+00, 9.98450317e+01, 1.99689941e+02, ..., 1.09460062e+05, 1.09559781e+05, 1.09659484e+05], [0.00000000e+00, 9.97810516e+01, 1.99561981e+02, ..., 1.09389914e+05, 1.09489570e+05, 1.09589211e+05], ..., [0.00000000e+00, 9.97951126e+01, 1.99590103e+02, ..., 1.09405336e+05, 1.09505000e+05, 1.09604656e+05], [0.00000000e+00, 9.98525391e+01, 1.99704956e+02, ..., 1.09468289e+05, 1.09568016e+05, 1.09667727e+05], [0.00000000e+00, 9.98811951e+01, 1.99762268e+02, ..., 1.09499703e+05, 1.09599461e+05, 1.09699203e+05]], dtype=float32)

- z(azimuth, range)float32 1.141e+03 1.145e+03 ... 6.821e+03

- standard_name :

- height_above_ground

- units :

- meters

array([[1141., 1145., 1150., ..., 6809., 6815., 6821.], [1141., 1145., 1150., ..., 6809., 6815., 6821.], [1141., 1145., 1150., ..., 6809., 6815., 6821.], ..., [1141., 1145., 1150., ..., 6809., 6815., 6821.], [1141., 1145., 1150., ..., 6809., 6815., 6821.], [1141., 1145., 1150., ..., 6809., 6815., 6821.]], dtype=float32)
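The x and y coordinates above are east-west and north-south distances (in meters) from the radar. They are expressed in the azimuthal equidistant projection whose origin appears in the grid-mapping attributes (latitude_of_projection_origin, longitude_of_projection_origin). The following minimal sketch converts such distances back to latitude and longitude with the standard inverse formulas for a spherical azimuthal equidistant projection. Two assumptions apply. First, the Earth is treated as a sphere of radius 6370997 m, even though the attributes list a WGS 1984 datum, so results are approximate. Second, the function name `aeqd_to_geographic` is hypothetical. Py-ART provides a comparable helper, `pyart.core.cartesian_to_geographic_aeqd`, which is probably the better choice in practice.

```python
import math

# Projection origin copied from the grid-mapping attributes listed above
LAT0 = -32.12641143798828
LON0 = -64.7283706665039
# Assumption: spherical Earth radius in meters (Py-ART's default for this transform)
EARTH_RADIUS = 6370997.0


def aeqd_to_geographic(x, y, lat0=LAT0, lon0=LON0, radius=EARTH_RADIUS):
    """Hypothetical helper: invert a spherical azimuthal equidistant projection.

    x, y: east-west and north-south distances from the radar, in meters.
    Returns (lat, lon) in degrees.
    """
    rho = math.hypot(x, y)
    if rho == 0.0:
        return lat0, lon0  # the gate sits at the radar itself
    c = rho / radius  # angular distance from the origin, in radians
    phi0 = math.radians(lat0)
    lat = math.asin(
        math.cos(c) * math.sin(phi0) + y * math.sin(c) * math.cos(phi0) / rho
    )
    lon = math.radians(lon0) + math.atan2(
        x * math.sin(c),
        rho * math.cos(phi0) * math.cos(c) - y * math.sin(phi0) * math.sin(c),
    )
    # Wrap longitude into [-180, 180)
    lon_deg = (math.degrees(lon) + 180.0) % 360.0 - 180.0
    return math.degrees(lat), lon_deg


# Far gate of the first ray shown above (about 109.7 km north of the radar)
print(aeqd_to_geographic(1198.9817, 109695.914))
```

For full 2-D fields, Py-ART's helper or a projection library is preferable to looping over gates in pure Python.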

- attenuation_corrected_differential_reflectivity (azimuth, range) float32 ...

- long_name :

- Rainfall attenuation-corrected differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- attenuation_corrected_differential_reflectivity_lag_1 (azimuth, range) float32 ...

- long_name :

- Differential reflectivity estimated at lag 1 corrected for rainfall attenuation.

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.73713

[397100 values with dtype=float32]

- attenuation_corrected_reflectivity_h (azimuth, range) float32 ...

- long_name :

- Rainfall attenuation-corrected reflectivity, horizontal channel

- units :

- dBZ

- standard_name :

- equivalent_reflectivity_factor

- applied_bias_correction :

- 1.8

[397100 values with dtype=float32]

- censor_mask (azimuth, range) int32 ...

- long_name :

- Censor Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16 32 64 128 256 512 1024 2048]

- flag_meanings :

- horizontal_snr_below_noise_threshold vertical_snr_below_noise_threshold horizontal_ccor_below_ccor_threshold vertical_ccor_below_ccor_threshold horizontal_sqi_below_sqi1_threshold vertical_sqi_below_sqi1_threshold horizontal_sqi_below_sqi2_threshold vertical_sqi_below_sqi2_threshold horizontal_sigpow_below_sigpow_threshold vertical_sigpow_below_sigpow_threshold urhohv_below_rhohv_threshold censored_by_clutter_micro_suppression

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]

- classification_mask (azimuth, range) int32 ...

- long_name :

- Classification Mask

- units :

- 1

- flag_masks :

- [ 1 2 4 8 16]

- flag_meanings :

- second_trip third_trip interference clutter sunspoke

- standard_name :

- radar_quality_mask

[397100 values with dtype=int32]
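Both censor_mask and classification_mask are bit fields. Each power-of-two value in flag_masks corresponds, in order, to one word of flag_meanings, so a single gate value can carry several flags at once. The following minimal sketch decodes one integer value into its flag names, using the attribute lists shown above. The function name `decode_flags` is hypothetical and is not part of ACT, Py-ART, or xradar.

```python
# flag_masks / flag_meanings copied from the censor_mask attributes above
CENSOR_FLAG_MASKS = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048]
CENSOR_FLAG_MEANINGS = (
    "horizontal_snr_below_noise_threshold vertical_snr_below_noise_threshold "
    "horizontal_ccor_below_ccor_threshold vertical_ccor_below_ccor_threshold "
    "horizontal_sqi_below_sqi1_threshold vertical_sqi_below_sqi1_threshold "
    "horizontal_sqi_below_sqi2_threshold vertical_sqi_below_sqi2_threshold "
    "horizontal_sigpow_below_sigpow_threshold vertical_sigpow_below_sigpow_threshold "
    "urhohv_below_rhohv_threshold censored_by_clutter_micro_suppression"
).split()

# flag_masks / flag_meanings copied from the classification_mask attributes above
CLASSIFICATION_FLAG_MASKS = [1, 2, 4, 8, 16]
CLASSIFICATION_FLAG_MEANINGS = "second_trip third_trip interference clutter sunspoke".split()


def decode_flags(value, masks, meanings):
    """Hypothetical helper: return the meanings whose bit is set in `value`."""
    if len(masks) != len(meanings):
        raise ValueError("flag_masks and flag_meanings must have the same length")
    return [name for mask, name in zip(masks, meanings) if int(value) & mask]


# 9 = 1 + 8: horizontal SNR and vertical CCOR thresholds both tripped
print(decode_flags(9, CENSOR_FLAG_MASKS, CENSOR_FLAG_MEANINGS))
# 8: the gate was classified as clutter
print(decode_flags(8, CLASSIFICATION_FLAG_MASKS, CLASSIFICATION_FLAG_MEANINGS))
```

For whole fields, the same bit test can be applied array-wise. For example, `(ds["classification_mask"] & 8) != 0` (with `ds` standing for the sweep Dataset) should give a per-gate boolean mask of clutter, because xarray passes integer bitwise operators through to NumPy.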

- copol_correlation_coeff (azimuth, range) float32 ...

- long_name :

- Copolar correlation coefficient (also known as rhohv)

- units :

- 1

- standard_name :

- radar_correlation_coefficient_hv

[397100 values with dtype=float32]

- differential_phase (azimuth, range) float32 ...

- long_name :

- Differential propagation phase shift

- units :

- degree

- standard_name :

- radar_differential_phase_hv

[397100 values with dtype=float32]

- differential_reflectivity (azimuth, range) float32 ...

- long_name :

- Differential reflectivity

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- differential_reflectivity_lag_1 (azimuth, range) float32 ...

- long_name :

- Differential reflectivity estimated at lag 1

- units :

- dB

- standard_name :

- radar_differential_reflectivity_hv

- applied_bias_correction :

- 3.7371335

[397100 values with dtype=float32]

- mean_doppler_velocity (azimuth, range) float32 ...

- long_name :

- Radial mean Doppler velocity, positive for motion away from the instrument

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- mean_doppler_velocity_v (azimuth, range) float32 ...

- long_name :

- Doppler velocity, vertical channel

- units :

- m/s

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument

[397100 values with dtype=float32]

- normalized_coherent_power (azimuth, range) float32 ...

- long_name :

- Normalized coherent power, also known as SQI.

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- normalized_coherent_power_v (azimuth, range) float32 ...

- long_name :

- Normalized coherent power, also known as SQI, Vertical Channel

- units :

- 1

- standard_name :

- radar_normalized_coherent_power

[397100 values with dtype=float32]

- reflectivity (azimuth, range) float32 ...

- long_name :

- Equivalent reflectivity factor

- units :

- dBZ

- standard_name :